LoBoS History

This is a detailed journey through how LoBoS came to be.

The Early Years

The first Beowulf cluster was developed by a group including Donald Becker, Thomas Sterling, Jim Fischer, and others at NASA's Goddard Space Flight Center in 1993-1994. The aim of the Beowulf project was to provide supercomputing performance at a fraction of the traditional cost. Two recent developments in technology made this possible: first, the introduction of cheap Intel and Intel-clone microprocessors that performed respectably compared with DEC's Alpha CPU, Sun's SPARC and UltraSPARC lines, and other high-performance CPUs; and second, the availability of capable open-source operating systems, most notably Linux. The Beowulf project was a success and spawned a variety of imitators at research institutions that wanted supercomputing power without paying the traditional price.

The original iteration of LoBoS was conceived by Bernard Brooks and Eric Billings in the mid-1990s as an attempt to use the architecture developed by the NASA group to improve the cost-effectiveness of molecular modeling. The first LoBoS cluster was constructed between January and April 1997 and remained in use until March 2000. It used hardware that was state of the art at the time. The network topology was a ring (each node had three NICs; see LoBoS 1 under LoBoS Previous Versions for more details) that was joined to the NIH campus network by a pair of high-speed interconnects. The cluster was able to take advantage of the recent parallelization of computational chemistry software such as CHARMM, and it was made available to collaborating researchers at NIH and other institutions.

Physical view of LoBoS 1

LoBoS through the Years

The LoBoS cluster, like the original Beowulf, proved to be a success. Researchers at NIH and collaborating institutions used it to develop large-scale parallel molecular modeling experiments and simulations. By 1998, however, the original cluster, whose nodes contained dual 200 MHz Pentium Pro processors, was becoming obsolete. A second cluster, LoBoS 2, was therefore built from nodes with dual 450 MHz Intel Pentium II processors. This cluster also abandoned the ring network topology in favor of a standard Ethernet bus. It had both Fast Ethernet and Gigabit Ethernet connections, a rarity in the late 1990s. Meanwhile, the original LoBoS cluster was converted for desktop use. This illustrated another advantage of the LoBoS business model: machines could be repurposed when newer technology became available for the cluster.

With the second incarnation of LoBoS, demand for cluster use continued to increase. To provide NIH and collaborating researchers with a top-of-the-line cluster environment, the Cluster 2000 committee was chartered to build a combined LoBoS 3/Biowulf cluster. This committee evaluated several options for processors, network interconnects, and other technologies.

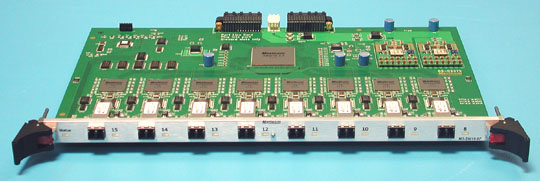

Despite the existence of LoBoS 3/Biowulf, the CBS staff decided to construct a LoBoS 4 cluster. This cluster used nodes with dual AMD Athlon MP processors. LoBoS 4 also added Myrinet, Myricom's proprietary high-speed, low-latency fiber network technology, which gave parallel applications a significant performance improvement. With this cluster, the CBS staff ran into power and reliability problems. Although these problems were mostly resolved, most of the LoBoS 4 nodes were ultimately returned to their vendor as trade-ins for LoBoS 5 nodes. LoBoS 5, completed in December 2004, was an evolutionary development of LoBoS 4, featuring nodes with dual Xeon processors and expanded use of Myrinet.

As LoBoS 5 began to age, plans were made for the construction of LoBoS 6, the first version of LoBoS to use 64-bit CPUs. The first batch of nodes, 52 dual-socket, dual-core Opteron systems, was brought online in late summer 2006. The next batch consisted of 76 dual-socket, quad-core Intel Clovertown nodes. Both batches use high-speed InfiniBand interconnects: single data rate (SDR; 10 Gbps) for the Opteron nodes and double data rate (DDR; 20 Gbps) for the Clovertown nodes.

LoBoS 7 emerged from the gradual replacement of the compute nodes and InfiniBand switches with newer versions, and it represented a major expansion of LoBoS.

The EonStor RAID arrays provide disk space for the LoBoS 5 cluster.

The Future

Thanks to the construction of a new data center, LoBoS has substantial capacity for expansion. The Laboratory of Computational Biology is considering several options for new nodes, with a focus on GPU and multi-GPU computing.

LoBoS Previous Versions

LoBoS 7

LoBoS 7 is a previous, 64-bit version of LoBoS:

Westmere Nodes

| Equipment | Notes |
|---|---|
| 96 compute nodes | |
| InfiniBand hardware | |

Sandy Bridge Nodes

| Equipment | Notes |
|---|---|
| 72 compute nodes | |
| InfiniBand hardware | |

Nehalem Nodes

| Equipment | Notes |
|---|---|
| 156 compute nodes | |
| 1 master node | |
| InfiniBand hardware | |

LoBoS 6

LoBoS 6 is another previous, 64-bit version of LoBoS:

Opteron Nodes

| Equipment | Notes |
|---|---|
| 52 compute nodes | |
| 1 master node | |
| InfiniBand hardware | |

Clovertown Nodes

| Equipment | Notes |
|---|---|
| 76 compute nodes | |
| InfiniBand hardware | |

Harpertown Nodes

| Equipment | Notes |
|---|---|
| 228 compute nodes | |
| InfiniBand hardware | |

LoBoS 5

Because of the unreliable motherboards in LoBoS 4, the Computational Biophysics Section staff decided to switch back to Intel CPUs with Supermicro motherboards. Since the Pentium 4 processor was not designed for multiprocessor operation, LoBoS 5 nodes were delivered with dual Intel Xeon processors. Approval for the cluster was granted in June 2003, and LoBoS 5 was installed in stages between March and December 2004. The first batch of 88 nodes was equipped with 2.66 GHz Xeons; all subsequent nodes have dual 3.06 GHz processors.

With 190 nodes now in operation, the LoBoS cluster has reached the limits of the physical space available to it on campus. A new, off-campus computer room has been constructed and work is underway to build out LoBoS 6.

| Equipment | Notes |
|---|---|
| 190 compute nodes | |
| 2 master nodes | |
| Myrinet switching hardware | The Myrinet switching hardware from LoBoS 4 was retained. In addition, a second, identical switch and line cards were added. |

LoBoS 5 nodes.

LoBoS 4

With the combination of LoBoS 3 and Biowulf, there was a need to upgrade the LoBoS 2 cluster, which by 2001 was showing its age. The new cluster was designed with 70 compute nodes. In a departure from previous clusters, these nodes used AMD CPUs instead of Intel chips. LoBoS 4 also introduced Myrinet, a proprietary high-bandwidth, low-latency data link layer network technology, to the LoBoS cluster.

Unfortunately, these nodes had reliability problems. The motherboards in particular proved problematic: they required frequent reboots, which put undue stress on the power supplies, which in turn failed at a much higher rate than expected. In addition, there were problems ensuring sufficient power and cooling for the nodes, although these issues were eventually resolved. Because of the component failures, however, the LoBoS 4 nodes were returned to their vendor in exchange for a discount on some of the nodes that would eventually become LoBoS 5.

| Equipment | Notes |
|---|---|
| 70 compute nodes | |
| 4 master nodes | |
| Myrinet switching hardware | |

A Myrinet M3-SW16 line card.

LoBoS 3

With the success of the first two incarnations of LoBoS, there was an increase in interest, both at NIH and other institutions, in using Beowulf clusters to conduct biochemical research. To meet the challenges posed by this new avenue of research and the increasing demand for cluster resources, CIT and NHLBI at NIH decided to combine forces to design a new cluster. The Cluster 2000 committee was therefore chartered to conduct the design work. The result of this collaboration was a combined LoBoS 3 and Biowulf cluster.

Biowulf itself is maintained by the same organization as the NIH Helix systems.

LoBoS nodes installed as part of Biowulf.

LoBoS 2

After the success of the initial LoBoS implementation, the Computational Biophysics Section decided to create a second cluster using hardware that was more modern at the time. Eric Billings once again took the lead in designing and implementing the cluster. Of particular note is the abandonment of the relatively inefficient ring topology in favor of a standard Fast Ethernet bus topology. A gigabit uplink provided high-speed networking outside the cluster's immediate environment. LoBoS 2 was built in July and August 1998 and was used from October 1998 to January 2001. It was completely converted to desktop use by June 2001.

| Equipment | Notes |
|---|---|
| 100 compute nodes | Full node specifications: |
| 4 master nodes | Note: These master nodes were upgraded for use with LoBoS 4. |

LoBoS 2 nodes installed. Note the fiber-optic Gigabit Ethernet connections.

LoBoS 1

The original LoBoS was used between June 1997 and March 2000. Its nodes were handed back for use as desktop machines between September 1999 and March 2000.

| Equipment | Notes |
|---|---|
| 47 compute nodes | |
| 4 master nodes | |

A diagram of the original LoBoS ring topology.

References

Biowulf Staff. NIH Biowulf home page.